Affordable plans with simple pricing

Credit Packs

Starter (150k Credits)

≈94 Articles or ≈112,500 processed words

Basic (300k Credits)

≈188 Articles or ≈225,000 processed words

Save 20%

Plus (600k Credits)

≈375 Articles or ≈450,000 processed words

Save 30%

Pro (1M Credits)

≈625 Articles or ≈750,000 processed words

Save 40%

Subscriptions

Basic (300k Credits / month)

≈188 Articles or ≈225,000 processed words

Save 20%

Plus (600k Credits / month)

≈375 Articles or ≈450,000 processed words

Save 30%

Pro (1M Credits / month)

≈625 Articles or ≈750,000 processed words

Save 40%

Beta User Special Offer

Are you a researcher? Join the Aginsi Beta Program and get bulk credits at cost!

Credits are non-refundable. By proceeding, you agree to the Return Policy.

Article estimates assume an average English-language article length of 1,200 words.
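The article and word figures for each pack follow from simple arithmetic. The sketch below reproduces them under three assumptions: one credit per token (consistent with the pack figures on this page, though not stated outright), ¾ of a word per token, and 1,200 words per article. The helper name `estimate_from_credits` is hypothetical, not part of any Aginsi API.

```python
# A minimal sketch of how the pack estimates can be derived.
# Assumptions (taken from this page's notes, not an official formula):
#   - 1 credit ~ 1 token
#   - 1 token ~ 3/4 of an English word
#   - 1 article ~ 1,200 words

WORDS_PER_ARTICLE = 1_200


def estimate_from_credits(credits: int) -> tuple[int, int]:
    """Return (approx. articles, approx. processed words) for a credit amount."""
    words = credits * 3 // 4  # 4 tokens ~ 3 words
    # Round half up to the nearest whole article using integer arithmetic.
    articles = (words + WORDS_PER_ARTICLE // 2) // WORDS_PER_ARTICLE
    return articles, words


if __name__ == "__main__":
    packs = {"Starter": 150_000, "Basic": 300_000, "Plus": 600_000, "Pro": 1_000_000}
    for name, credits in packs.items():
        articles, words = estimate_from_credits(credits)
        print(f"{name}: ≈{articles} articles or ≈{words:,} processed words")
```

Integer arithmetic keeps the results exact for these round credit amounts and avoids floating-point rounding surprises at values such as 187.5 articles.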

What are Credits?

Credits correspond to tokens, which are common sequences of characters found in text. A token is roughly ¾ of a word, so 100 tokens ≈ 75 words. Languages written in non-Latin scripts, such as Greek, Arabic, or Chinese, produce more tokens per word or character. Processed words include both tokenized inputs (e.g., an article and a prompt) and outputs (e.g., a summary). You can count the tokens in a sentence using OpenAI's Tokenizer.
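Because both inputs and outputs count, a rough cost for a single request can be estimated from word counts alone. This is a sketch using the ¾-word-per-token rule of thumb, assuming one credit per token and English text; `estimate_credits` and its parameters are hypothetical names chosen for illustration.

```python
# Rough credit estimate for one request, using the ~3/4-word-per-token rule of thumb.
# Assumptions (hypothetical helper, not an Aginsi API):
#   - 1 credit ~ 1 token
#   - English text; non-Latin scripts usually need more tokens per word
# For exact counts, run the text through a real tokenizer instead.


def estimate_credits(article_words: int, prompt_words: int, output_words: int) -> int:
    """Estimate credits for input (article + prompt) plus output (e.g. a summary)."""
    total_words = article_words + prompt_words + output_words
    # tokens ~ words * 4/3, rounded up with integer ceiling division
    return -(-(total_words * 4) // 3)


if __name__ == "__main__":
    # A 1,000-word article, a 20-word prompt, and a 100-word summary.
    print(estimate_credits(1_000, 20, 100))
```

Treat the result as a ballpark only: real token counts vary with vocabulary, punctuation, and formatting, so a tokenizer will give a more reliable figure for any specific text.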

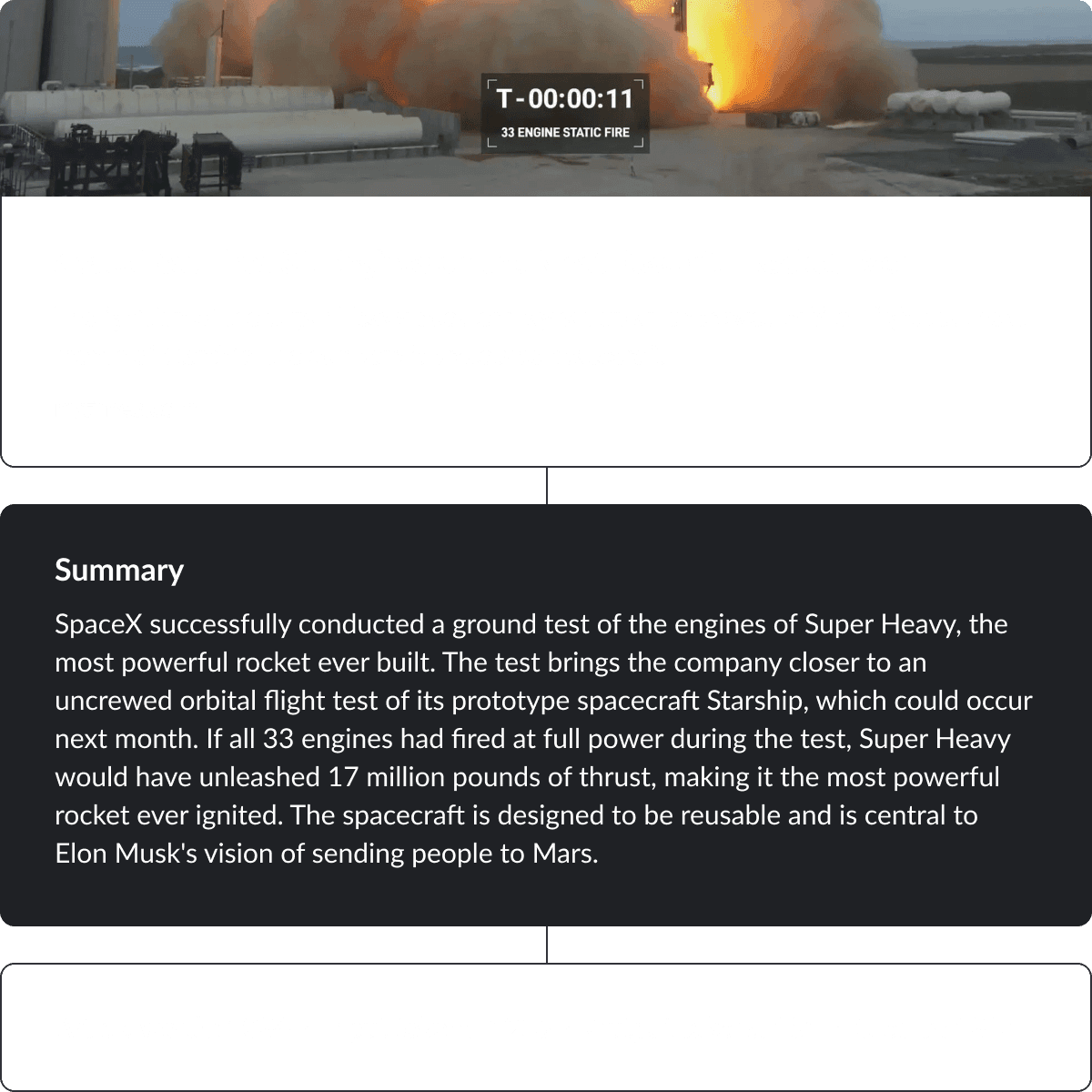

Below is an example using a ~1,000-word New York Times article. Producing a short summary cost 1,314 credits.